Let’s face it.

Software testing is complicated. Especially if you are new, you may get lost and not know which testing activities to focus on to succeed in a testing career… and I agree.

However, believe it or not, software testing is all about bugs. Sooner or later, you will realize that most of your daily testing work is built around two main activities: defect detection and defect prevention. That being said, if you put most of your effort into these two activities, you are putting yourself in a position to succeed. In today’s post, I’d like to explain these two activities: what they are, why you need them and what to do with them.

First things first,

What is a software bug?

Simple. It’s a bug in software 😀

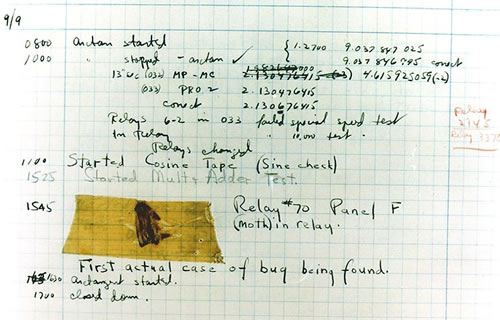

Don’t know what it is? It’s the first “real” bug found.

At 3:45 p.m. on September 9, 1947, Grace Murray Hopper recorded the first computer bug in her log book while working on the Harvard Mark II. The problem was traced to a moth trapped in a relay, which Hopper duly taped into the Mark II’s log book with the note: “First actual case of bug being found.”

Seriously, according to Wikipedia, a software bug is an error, flaw, failure or fault in a computer program or system that causes it to produce an incorrect or unexpected result, or to behave in unintended ways.

There’s no single definition of a software bug. James Bach has his own: “A bug is anything that threatens the value of the product. Something that bugs someone whose opinion matters.”

There are more definitions on the Internet, but basically, a bug or a defect is anything that does not work as expected in the system.

Why does software have bugs?

Glad you asked!

There are various reasons. SoftwareTestingHelp provides a list of 20 reasons why software has bugs. Of these, the most important are:

- Missing or misunderstood requirements (e.g., changed requirements are not tracked or managed properly, requirements are unclear or ambiguous, requirements are not testable, etc.)

- The complexity of software: With the advancement of technology, software today is no longer just a simple website or a standalone desktop application. It is getting more and more complex as it has to handle large amounts of data, integrate third-party modules and run cross-platform. Think IoT, Big Data, machine learning, AI… you name it. As a result, developing and testing such systems is really challenging.

- Time pressure to release: To compete in the market, teams are under pressure to release software as soon as possible. Naturally, when the release schedule shrinks, testing time and communication get cut, things are done in a hurry and, consequently, errors happen and bugs are introduced.

Some may ask: what about programming errors, do they still play a part? Well, not as big a part as they used to. These days, with the support of advanced software development tools, programming errors are becoming less common, and good programming practices reduce coding errors even further.

Those are common sources of software bugs.

“But does this have anything to do with defect detection?” Yes, it does. Knowing where defects come from helps you direct your testing effort to the areas where you are most likely to find them.

Let’s get into the meat of the post.

Software defect detection

In other words, how do you find bugs in software?

When it comes to software testing skills, finding bugs is still one of the most crucial. To some extent, a great tester is one who can find the most important bugs in the system: the more important bugs you find, the better the job you are doing. Of course, it takes many other skills to become a great tester, but finding bugs is still a vital one.

There are two main approaches to finding bugs in the system:

- Scripted testing

- Exploratory testing

Scripted testing:

In this type of testing, you are expected to run through a list of scripted test cases or a checklist to verify the system against defined requirements and record whether each requirement passes or fails. Even though scripted testing is a must in testing, I’m afraid testers cannot detect many bugs just by executing scripted test cases.
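To make this concrete, here is a minimal Python sketch of scripted testing. The function `apply_discount`, the requirement IDs and the expected values are all hypothetical, made up for illustration; the point is only that each scripted case checks one predefined path and reports pass or fail.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical system under test: apply a percentage discount."""
    return round(price * (1 - percent / 100), 2)


# Scripted test cases: (requirement ID, inputs, expected result).
# The IDs and values are illustrative only.
TEST_CASES = [
    ("REQ-1", (100.0, 10.0), 90.0),
    ("REQ-2", (50.0, 0.0), 50.0),
    ("REQ-3", (80.0, 100.0), 0.0),
]


def run_scripted_tests(cases):
    """Execute each scripted case and record PASS or FAIL per requirement."""
    results = {}
    for req_id, args, expected in cases:
        actual = apply_discount(*args)
        results[req_id] = "PASS" if actual == expected else "FAIL"
    return results


print(run_scripted_tests(TEST_CASES))
# {'REQ-1': 'PASS', 'REQ-2': 'PASS', 'REQ-3': 'PASS'}
```

Every case follows a path someone wrote down in advance, so a run like this can only confirm what was already anticipated.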

Why is that? Scripted test cases are usually designed around the critical paths of the system, and because those paths are critical, developers tend to take very good care of them.

Please also note that I’m not saying bugs found by executing test cases are not valuable; on the contrary, bugs found on critical paths are considered the highest priority. What I’m trying to say is that if you follow the same path again and again, you have less chance of discovering new defects.

Now, if you want to find a lot more bugs, I’d suggest you take this path:

Exploratory testing:

When it comes to exploratory testing, James Bach and Michael Bolton are real experts. Michael Bolton has even come up with a mnemonic, FEW HICCUPPS, which many testers treat as a “go-to” guideline for recognizing bugs in the system. Here is what FEW HICCUPPS stands for:

Familiarity. We expect the system to be inconsistent with patterns of familiar problems.

Explainability. We expect a system to be understandable to the degree that we can articulately explain its behaviour to ourselves and others.

World. We expect the product to be consistent with things that we know about or can observe in the world.

History. We expect the present version of the system to be consistent with past versions of it.

Image. We expect the system to be consistent with an image that the organization wants to project, with its brand, or with its reputation.

Comparable Products. We expect the system to be consistent with systems that are in some way comparable.

Claims. We expect the system to be consistent with things important people say about it, whether in writing (references, specifications, design documents, manuals, whiteboard sketches…) or in conversation (meetings, public announcements, lunchroom conversations…).

Users’ Desires. We believe that the system should be consistent with ideas about what reasonable users might want.

Product. We expect each element of the system (or product) to be consistent with comparable elements in the same system.

Purpose. We expect the system to be consistent with the explicit and implicit uses to which people might put it.

Statutes. We expect a system to be consistent with laws or regulations that are relevant to the product or its use.

In other words, if the system fails to meet any of the expectations above, you have good grounds to say “it’s a bug”.
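As a rough illustration of one of these oracles, History, here is a Python sketch that compares a current release against the previous one on the same inputs. Both `shipping_fee_v1` and `shipping_fee_v2` are hypothetical stand-ins for two versions of a product. A difference is a reason to investigate, not automatically a bug, since the change may have been intended.

```python
def shipping_fee_v1(weight_kg: float) -> float:
    """Hypothetical previous release: flat fee plus 2.0 per kg."""
    return 5.0 + 2.0 * weight_kg


def shipping_fee_v2(weight_kg: float) -> float:
    """Hypothetical current release: cheaper per-kg rate above 10 kg."""
    if weight_kg > 10:
        return 5.0 + 1.5 * weight_kg
    return 5.0 + 2.0 * weight_kg


def history_inconsistencies(old, new, inputs):
    """Return (input, old result, new result) wherever the versions disagree."""
    return [(x, old(x), new(x)) for x in inputs if old(x) != new(x)]


for weight, before, after in history_inconsistencies(
    shipping_fee_v1, shipping_fee_v2, [1, 5, 10, 20]
):
    print(f"weight={weight}: v1={before}, v2={after} -> investigate")
# weight=20: v1=45.0, v2=35.0 -> investigate
```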

But it doesn’t stop there. If you want to find more bugs, be creative, think outside the box and build your own heuristics.

Bug’s priority and severity

But not all bugs are equally valuable: some are more important and more worth fixing than others.

Let’s assume there are two testers:

Tester A: Found 5 bugs in a build

Tester B: Found 20 bugs in a build

Which tester is better? Well, we don’t know; there’s not enough data to tell.

Now what if:

Tester A: Found 5 bugs in a build and most of them crash the system.

Tester B: Found 20 bugs in a build and most of them are trivial; no one cares to fix them.

Obviously, Tester A did a better job than Tester B.

As a tester, your goal is not just to find bugs. Your goal is to find important bugs that are worth fixing. In other words, don’t focus on quantity; the quality of your defects is what matters.
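One way to picture the Tester A vs. Tester B comparison is a severity-weighted score. The sketch below is only an illustration: the severity scale and the weights are assumptions, not a standard, and a single number like this is no substitute for judgment.

```python
# Assumed severity scale and weights, for illustration only.
SEVERITY_WEIGHT = {"critical": 10, "major": 5, "minor": 2, "trivial": 1}


def weighted_score(severities):
    """Sum the assumed weights of the bugs a tester reported."""
    return sum(SEVERITY_WEIGHT[s] for s in severities)


# Tester A: 5 bugs, most of them crash the system.
tester_a = ["critical", "critical", "critical", "major", "minor"]
# Tester B: 20 bugs, most of them trivial.
tester_b = ["trivial"] * 18 + ["minor", "major"]

print(len(tester_a), weighted_score(tester_a))  # 5 37
print(len(tester_b), weighted_score(tester_b))  # 20 25
```

Tester B reports four times as many bugs, yet Tester A’s handful of crashes carries more weight under any scale that ranks crashes above cosmetic issues.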

Related read: Priority vs Severity

How to report a bug… the right way?

So you found a defect, great! The next (and equally important) step is to report it.

“Hey, I found a bug, take a look”

Sadly, many testers overlook this step. They write poor defect reports that fail to describe the problems clearly, and such poorly written reports waste all the effort they put into finding the defects.

Please note that as a tester you are not only testing; you are providing a service. Your service is to provide as much information as possible about the system under test to your customers/stakeholders.

Pay as much attention to the bug report as to finding the bug.
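A structured report makes it harder to skip the essentials. Here is a minimal Python sketch of a bug report record; the field names are assumptions modeled on common bug report templates, not the schema of any particular tracker, and the example defect is made up. The `missing_fields` helper flags a report like “Hey, I found a bug, take a look” before it is filed.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    """Illustrative bug report record; field names are assumptions."""
    title: str
    steps_to_reproduce: list = field(default_factory=list)
    expected_result: str = ""
    actual_result: str = ""
    severity: str = ""
    priority: str = ""
    environment: str = ""

    def missing_fields(self):
        """Return the names of essential fields that are still empty."""
        essentials = {
            "steps_to_reproduce": self.steps_to_reproduce,
            "expected_result": self.expected_result,
            "actual_result": self.actual_result,
            "severity": self.severity,
        }
        return [name for name, value in essentials.items() if not value]


vague = BugReport(title="Hey, I found a bug, take a look")
print(vague.missing_fields())
# ['steps_to_reproduce', 'expected_result', 'actual_result', 'severity']

clear = BugReport(
    title="Checkout total shows 0.00 after applying a 10% coupon",
    steps_to_reproduce=["Add one item priced 100.00", "Apply a 10% coupon", "Open checkout"],
    expected_result="Total is 90.00",
    actual_result="Total is 0.00",
    severity="major",
    priority="high",
    environment="staging",
)
print(clear.missing_fields())  # []
```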

Related read: How to write effective bug report

How to know if you are doing a good job at defect detection?

The good news is that you can rely on metrics to help you measure the effectiveness of defect detection.

There are different metrics for measuring the effectiveness of defect detection, but two popular ones are:

Defect Detection Percentage:

The defect detection percentage (DDP) gives a measure of testing effectiveness. It is calculated as the ratio of defects found before release to all defects found, that is, those found before release plus those found by customers after release.

Formula: DDP = Number of defects found before release / (Number of defects found before release + Number of escaped defects found after release) × 100%

Example:

- Number of defects found in Testing phase: 60

- Number of defects found after release: 20

DDP = (60/(60+20))*100= 75%
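The same calculation as a small Python function, assuming you only have the two counts from the example (defects found before release and defects found after release); the function name is illustrative:

```python
def defect_detection_percentage(found_before_release: int, found_after_release: int) -> float:
    """DDP = defects found before release / all defects found, as a percentage."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded, so DDP is undefined")
    return found_before_release / total * 100


print(defect_detection_percentage(60, 20))  # 75.0
```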

Defect Leakage Rate:

Defect Leakage Rate gives a measure of how well testers detect defects, based on the bugs they missed (a.k.a. bugs found by customers). It is calculated as the ratio of the number of defects found by customers to the number of valid defects found by testers.

Formula: DLR = Number of defects found by customers after release / Number of valid defects found by testers before release × 100%

For example, if the client found 9 defects after release, and during testing the testers reported 201 defects, of which 20 were invalid, then:

Defect Leakage = (9 / (201 - 20)) * 100 ≈ 4.97%
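And the leakage calculation, again as a minimal Python sketch that takes the three counts from the example; the function and parameter names are illustrative:

```python
def defect_leakage_rate(found_by_customers: int, reported_by_testers: int, invalid_reports: int) -> float:
    """DLR = customer-found defects / valid tester-found defects, as a percentage."""
    valid_reports = reported_by_testers - invalid_reports
    if valid_reports <= 0:
        raise ValueError("need at least one valid defect found by testers")
    return found_by_customers / valid_reports * 100


rate = defect_leakage_rate(9, 201, 20)
print(f"{rate:.2f}%")  # 4.97%
```

Subtracting the invalid reports first matters: dividing by all 201 reports would understate the leakage.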

Like I said above, the good news is that we have metrics to rely on… and here’s the bad news:

Metrics can be misleading, and they can do more harm than good if you rely solely on one metric to make decisions without considering context.

Let’s get into the second part of the post:

Software defect prevention

What is defect prevention and why do we need it?

Note: This part is a bit advanced for new testers. However, if you can apply defect prevention practices in your work, you will take yourself to the next level 😉

So, what is defect prevention?

Defect prevention is a strategy applied to the software development life cycle that identifies the root causes of defects and prevents them from occurring.

But you may ask, “Why do we need it? I thought as a tester, my job is to find as many bugs as possible. If we prevent them, what’s left to find?”

That makes sense, but it’s not entirely true.

When I first started, I thought exactly the same. I thought I was cool when I could find a lot of bugs in the system. Some of my bugs crashed the system and sometimes embarrassed the developers.

I felt like this:

…but over time, the excitement faded. It’s not that I’m not proud of the good defects I found; it’s just that I realized defects are not that cool.

I’ll explain…

Finding defects is good: the more you find, the better for your product. However, in Lean Manufacturing, defects are one of the seven wastes of lean. The problem with this waste is the cost it entails. In manufacturing, these costs include rework, scrap, materials, rescheduling, etc. If you are in the manufacturing industry, you will know that eliminating waste is the priority.

Now in the software industry, the cost of software defects may not be as clear as in manufacturing, but it’s still a waste.

Look! When you find a defect, the team spends time reviewing it and deciding how to deal with it. If the team agrees to fix it, the fix costs time and resources. Once developers provide the fix, testers need to verify it and perform regression testing. Clearly, every defect costs extra time and resources to handle.

That being said, it doesn’t mean you should stop finding defects, or hide them just to reduce the cost. No, that’s not what I mean. What I mean is that if you can help prevent defects from occurring in the first place, that is very, very valuable.

So, how do you prevent them?

Basically, defect prevention includes activities that *prevent* defects from being introduced into the software in the first place. Here are some ideas:

- Analyze and review requirements: This is one of the most important defect prevention practices, because most defects are introduced into software through unclear or untestable requirements. Analyzing and reviewing requirements reduces the risk of introducing such defects.

- Code review (including peer review and self-review): This reduces defects related to algorithm implementation, incorrect logic or missing conditions.

- Root cause analysis: Analyzing the root cause of a defect to prevent it from happening again (e.g., 5 Whys, Ishikawa diagram).

There are more practices, but these are the most important ones.

As you may see, some of these practices are not testers’ responsibility. However, you can always provide input to those activities. That’s why testers need to be involved from the beginning and throughout the software development phases.
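To illustrate the 5 Whys technique from the root cause analysis practice, here is a Python sketch that records a chain of “why” questions and answers for a made-up defect. The defect and every answer are hypothetical; five is a rule of thumb, so the chain may stop earlier once an actionable root cause is reached.

```python
def five_whys(problem: str, answers: list, max_depth: int = 5):
    """Pair each question with its answer, up to max_depth levels.

    Each answer becomes the subject of the next "why"; the last answer
    reached is returned as the candidate root cause.
    """
    chain = []
    current = problem
    for answer in answers[:max_depth]:
        chain.append((current, answer))
        current = answer
    return chain, current


# A made-up defect and answers, for illustration only.
problem = "Checkout crashes when the cart is empty"
answers = [
    "The average-price calculation divides by the number of items",
    "The empty-cart case was never handled in the code",
    "The requirement did not mention empty carts",
    "Requirements were not reviewed for edge cases",
]

chain, root_cause = five_whys(problem, answers)
for level, (question, answer) in enumerate(chain, start=1):
    print(f"Why #{level}: {question}\n  Because: {answer}")
print("Candidate root cause:", root_cause)
```

In this made-up case the chain ends at a gap in requirements review, the kind of cause that a prevention practice, rather than more testing, addresses.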

“Zero defects” is a myth.

Now, it may seem that if you follow all the good practices of defect detection and defect prevention, no defects should leak to customers, right? Sadly, no. Regardless of how well you detect or prevent bugs, there’s no way to catch or prevent all of them, and missing bugs is part of the testing game. You can’t eliminate them entirely. What you can do is reduce the number of missed bugs (so that the ones that slip through are less important) and prepare a good attitude to deal with them.

Sometimes, all you need to do is:
